Biography

Hi! I’m Mayank Sharma, an AI & Robotics Engineer with an M.Eng. in Robotics from the University of Maryland (’24). At Ryght Inc. I built LLM‑powered diagnostic tooling, including a side‑by‑side chatbot‑comparison framework and a radiology triage module that together cut manual review time by roughly 35 percent. Earlier, as a Computer Vision Engineer at Kick Robotics, I designed the perception stack and autonomy logic for a warehouse AMR that navigates aisles, avoids obstacles, and monitors carbon‑monoxide levels. My research at the Robotics Algorithms & Autonomous Systems Lab focused on Next‑Best‑View planning and deep‑learning‑driven 3‑D reconstruction to improve object mapping, and at IIT Bombay I co‑designed a magnetic UAV docking mechanism that extended quad‑rotor flight time by 40 percent. I am proficient in Python, C++, ROS2/Nav2, OpenCV, PyTorch, MATLAB, TypeScript, React, and Tailwind CSS. Passionate about the intersection of autonomy, perception, and large language models, I am actively exploring new opportunities and am open to relocation.

- Autonomous Systems

- Computer Vision

- Path Planning

- Control Systems

Master's in Robotics, 2024

University of Maryland

B.Tech in Mechatronics, 2022

NMIMS University

Experience

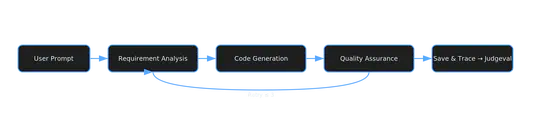

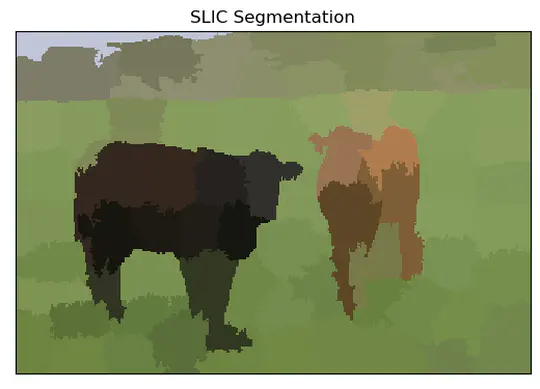

- Spearheading a modular Pokemon card grading workflow, streamlining pre-processing to support automated quality evaluation.

- Developed a Pokemon card segmentation pipeline by combining YOLOv12 detection with SAM2 fine-grained segmentation, achieving 98% mean IoU across varied lighting and backgrounds.

- Built a perspective correction pipeline using RANSAC edge fitting and homography warping, improving alignment precision by 30%.

- Built a chat app in React for side-by-side LLM comparison, with editable prompt templates and settings, improving testing efficiency.

- Revamped product features across Ryght AI’s clinical trial platform by modernizing legacy UI with React, TypeScript, Next.js 13, and Tailwind CSS, delivering a cohesive and user-friendly interface for sponsors and partners.
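For readers unfamiliar with the mean IoU figure quoted for the segmentation pipeline above, here is a minimal sketch of how that metric is commonly computed. It is not the project's evaluation code: the `Mask` type and the `iou` and `mean_iou` helpers are hypothetical names, and the masks are assumed to be same-sized binary grids.

```python
from typing import List

# Assumption: a mask is a 2-D grid where 1 marks a card pixel and 0 background.
Mask = List[List[int]]


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over Union of two same-sized binary masks."""
    inter = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                inter += 1  # pixel is in both masks
            if p or t:
                union += 1  # pixel is in at least one mask
    # Two empty masks agree perfectly, so treat that case as IoU = 1.
    return inter / union if union else 1.0


def mean_iou(preds: List[Mask], truths: List[Mask]) -> float:
    """Average the per-image IoU over a set of predictions."""
    if not preds or len(preds) != len(truths):
        raise ValueError("need equally sized, non-empty lists of masks")
    scores = [iou(p, t) for p, t in zip(preds, truths)]
    return sum(scores) / len(scores)
```

A mean IoU of 98% would mean that, averaged over the test images, the predicted and reference masks overlap on 98% of the pixels covered by either one.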

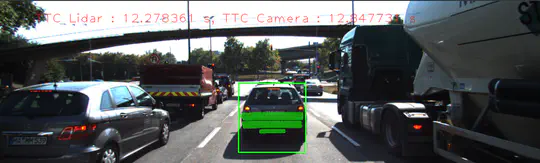

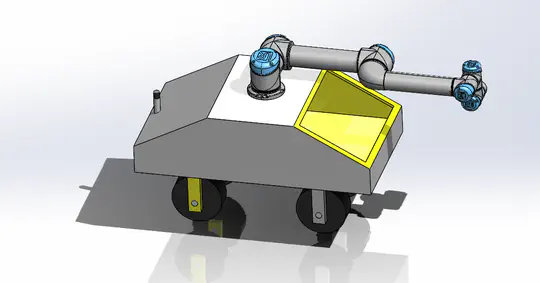

- Developed an autonomous mobile robot (AMR) using NVIDIA Jetson and ROS2 Nav2 for mapping, navigation, and carbon monoxide monitoring, with EKF-based fusion of wheel odometry and IMU data for robust state estimation.

- Implemented a custom ROS2 Nav2 costmap plugin for the AMR, using YOLOv8n for real-time pixel-level image classification.

- Optimized the segmentation model with TensorRT, achieving 30% faster inference and thereby improving warehouse navigation efficiency.

- Automated model training on AWS, using CloudFormation to orchestrate EC2 instances, manage data on S3, trigger SNS notifications via Lambda, and monitor runs with CloudWatch.

- Streamlined CI/CD by containerizing ROS2 packages with Docker and building SIL simulations with unit/integration tests, ensuring reliable AMR deployment and robust performance in mapping, navigation, and monitoring.

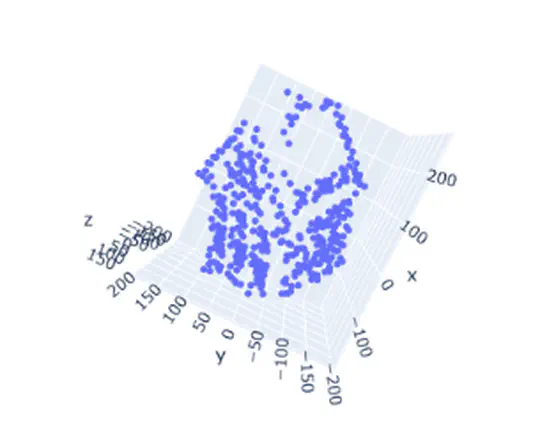

- Worked on optimizing 3D reconstruction and object mapping with Next-Best-View (NBV) planning by estimating image-based uncertainty to maximize information gain, and using deep learning with Gaussian splats to predict full models from partial views.

- Achieved ~20 cm landing accuracy with a precision-landing algorithm using AprilTags and OpenCV pose estimation in ROS and PX4.

- Increased UAV flight time by ~720x (from 1 hour to 30 days) by designing the CAD, manufacturing the hardware, and developing the firmware for a novel modular mid-air docking and battery-swapping mechanism, integrated into a single robotic system.

- Researched nonlinear BLDC motor speed control methods and implemented a speed control algorithm based on a sliding mode reaching law (SMRL) in MATLAB Simulink.

Projects

Competitions

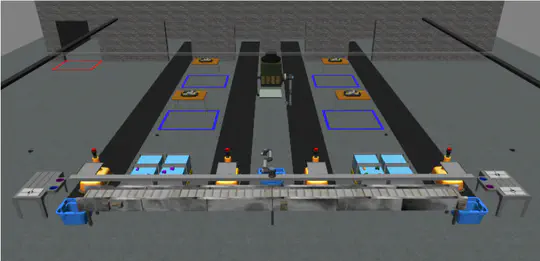

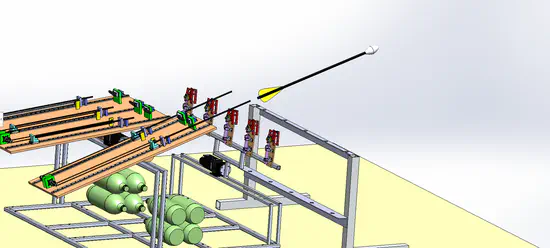

ABU Robocon 2021

Co-led a team of 70 people, overseeing the Manufacturing, Design, and Simulation departments, to build two robots from scratch that shoot arrows into a pot placed at a distance. The team achieved a national rank of 11 (Results).